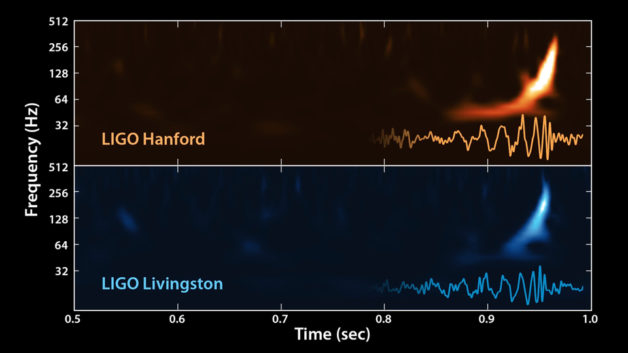

On September 14, 2015, LIGO instruments detected gravitational waves for the first time. This detection confirms a major prediction of Albert Einstein’s 1915 general theory of relativity and opens an unprecedented new window onto the cosmos. This finding also earned Barry Barish and Kip Thorne of Caltech and Rainer Weiss of MIT the 2017 Nobel Prize in Physics.

Behind the headlines was the work of two Berkeley Lab groups specializing in developing tools and applications for moving and managing data and allowing scientists around the world to access and analyze the information.

Python/Globus Tools

Back in 2004, two years before LIGO began operating at design sensitivity and 13 years before the project received the 2017 Nobel Prize in physics, programming tools developed at the U.S. Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) were used to set up an efficient system to distribute the data that would put the predictions of Albert Einstein’s General Theory of Relativity to the test. Using Python/Globus tools developed by Keith Jackson and his colleagues in the Computational Research Division’s Secure Grid Technologies Group, more than 50 terabytes of data from LIGO were replicated to nine sites on two continents, quickly and robustly.

LIGO, the Laser Interferometer Gravitational-Wave Observatory, is a facility dedicated to detecting cosmic gravitational waves – ripples in the fabric of space and time – and interpreting these waves to provide a more complete picture of the universe. Funded by the National Science Foundation, LIGO consists of two widely separated installations – one in Hanford, Washington, and the other in Livingston, Louisiana – operated in unison as a single observatory. Data from LIGO would be used to test the predictions of general relativity – for example, whether gravitational waves propagate at the same speed as light, and whether the graviton particle has zero rest mass. LIGO conducts blind searches of large sections of the sky and produces an enormous quantity of data – almost 1 terabyte a day – which requires large-scale computational resources for analysis.

LIGO Scientific Collaboration (LSC) scientists at 41 institutions worldwide need fast, reliable, and secure access to the data. To optimize access, the data sets are replicated to computer and data storage hardware at nine sites: the two observatory sites plus Caltech, MIT, Penn State, the University of Wisconsin at Milwaukee (UWM), the Max Planck Institute for Gravitational Physics/Albert Einstein Institute in Potsdam, Germany, and Cardiff University and the University of Birmingham in the UK. The LSC DataGrid uses the DOEGrids Certificate Authority operated by ESnet to issue identity certificates and service certificates.

The data distribution tool used by the LSC DataGrid is the Lightweight Data Replicator (LDR), which was developed at UWM as part of the Grid Physics Network (GriPhyN) project. LDR is built on a foundation that includes the Globus Toolkit®, Python, and pyGlobus, an interface that enables Python access to the entire Globus Toolkit. LSC DataGrid engineer Scott Koranda describes Python as the “glue to hold it all together and make it robust.”

PyGlobus is one of two Python tools developed by Jackson’s group for the Globus Toolkit, the basic software used to create computational and data grids. The pyGlobus interface or “wrapper” allows the use of the entire Globus Toolkit from Python, a high-level, interpreted programming language that is widely used in the scientific and web communities. PyGlobus is included in the current Globus Toolkit 3.2 release.

“What’s great about using pyGlobus and Python is the speed and ease of development for setting up a new production grid application,” Jackson said. “The scientists spend less time programming and move on to their real work – analyzing data – faster.”

Unfortunately, Jackson was not able to see the results of his work with LIGO; he died of cancer in 2013.

Berkeley Storage Manager

When establishing a long-term partnership like a marriage, it’s traditional to have a best man at your side. When the LIGO project at Caltech needed a reliable partner for moving and managing data from the project’s observatories, they also chose BeStMan, the Berkeley Storage Manager software developed by the Scientific Data Management Group at Lawrence Berkeley National Laboratory.

BeStMan was a full implementation of the Storage Resource Manager standard developed by the Scientific Data Management Group. Used as a data movement broker, BeStMan manages multiple file transfers without user intervention when a request for large-scale data movements of thousands of files is submitted.

The software also automatically recovers from transient failures, supports recursive directory transfer requests and verifies that enough storage space exists to accommodate file transfer requests.
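The three behaviors described above (recursive directory requests, a storage-space check before transfer, and recovery from transient failures) can be illustrated with a small Python sketch. This is not BeStMan code and does not use the Storage Resource Manager interface; it copies files on a local filesystem, and every name in it (`transfer_all`, `expand_request`, `has_enough_space`, `TransientTransferError`) is hypothetical and chosen only for illustration.

```python
import shutil
import time
from pathlib import Path


class TransientTransferError(Exception):
    """Hypothetical error type marking a failure that is worth retrying."""


def expand_request(source):
    """Expand a file or directory request into a sorted list of regular files."""
    source = Path(source)
    if source.is_file():
        return [source]
    return sorted(p for p in source.rglob("*") if p.is_file())


def has_enough_space(dest_dir, files):
    """Check that the destination has room for every requested file."""
    needed = sum(Path(f).stat().st_size for f in files)
    return shutil.disk_usage(dest_dir).free >= needed


def transfer_all(source, dest_dir, copy=shutil.copy2, max_attempts=3, delay=0.0):
    """Copy everything under source to dest_dir, retrying transient failures.

    `copy` is injectable so a real transfer function (or a test double)
    can stand in for the local copy used here as an assumption.
    """
    source, dest_dir = Path(source), Path(dest_dir)
    files = expand_request(source)
    dest_dir.mkdir(parents=True, exist_ok=True)
    if not has_enough_space(dest_dir, files):
        raise OSError("not enough free space at destination")

    base = source if source.is_dir() else source.parent
    transferred = []
    for f in files:
        target = dest_dir / f.relative_to(base)
        target.parent.mkdir(parents=True, exist_ok=True)
        for attempt in range(1, max_attempts + 1):
            try:
                copy(f, target)
                transferred.append(target)
                break
            except TransientTransferError:
                if attempt == max_attempts:
                    raise  # give up after the last allowed attempt
                time.sleep(delay)
    return transferred
```

Calling `transfer_all("run_data", "replica")` would copy a directory tree named `run_data` into `replica` without further user input. A production broker would also need to handle concurrency, authentication, and remote storage, none of which this sketch attempts.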

BeStMan was used by both the Open Science Grid and the Earth System Grid and was deployed at nearly 60 sites, including the LIGO cluster at Caltech, the STAR project at Brookhaven National Laboratory and the U.S. ATLAS and U.S. CMS experiments at the Large Hadron Collider. The software also contributed to the work done by the Intergovernmental Panel on Climate Change, which won the Nobel Peace Prize in 2007.

Read more about how ESnet supports the LIGO scientific collaboration at “ESnet Congratulates the LIGO Visionaries on their 2017 Nobel Prize in Physics.”