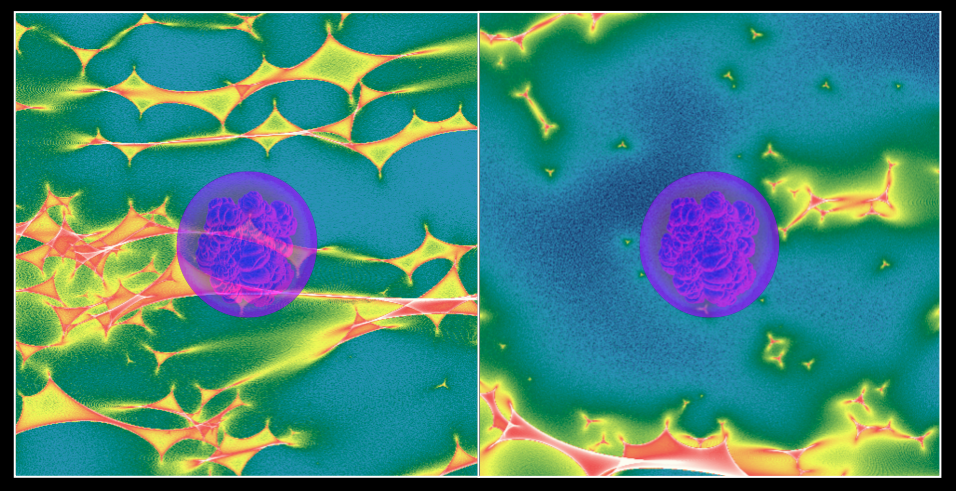

This composite of two astrophysics simulations shows a Type Ia supernova (purple disc) expanding over different microlensing magnification patterns (colored fields). Because individual stars in the lensing galaxy can significantly change the brightness of a lensed event, regions of the supernova can experience varying amounts of brightening and dimming, which scientists believed would be a problem for cosmologists measuring time delays. Using detailed computer simulations at NERSC, astrophysicists showed that this would have a small effect on time-delay cosmology. (Credit: Danny Goldstein/UC Berkeley)

In 1929 Edwin Hubble surprised many people – including Albert Einstein – when he showed that the universe is expanding. Another bombshell came in 1998 when two teams of astronomers proved that cosmic expansion is actually speeding up due to a mysterious property of space called dark energy. This discovery provided the first evidence of what is now the reigning model of the universe: “Lambda-CDM,” which says that the cosmos is approximately 70 percent dark energy, 25 percent dark matter and 5 percent “normal” matter (everything we’ve ever observed).

Until 2016, Lambda-CDM agreed beautifully with decades of cosmological data. Then a research team used the Hubble Space Telescope to make an extremely precise measurement of the local cosmic expansion rate. The result was another surprise: the researchers found that the universe was expanding a little faster than predicted by Lambda-CDM fitted to measurements of the Cosmic Microwave Background (CMB), the relic radiation from the Big Bang. So it seems something’s amiss – could this discrepancy be a systematic error, or possibly new physics?

Astrophysicists at Lawrence Berkeley National Laboratory (Berkeley Lab) and the Institute of Cosmology and Gravitation at the University of Portsmouth in the U.K. believe that strongly lensed Type Ia supernovae are the key to answering this question. And in a new Astrophysical Journal paper, they describe how to control “microlensing,” a physical effect that many scientists believed would be a major source of uncertainty facing these new cosmic probes. They also show how to identify and study these rare events in real time.

“Ever since the CMB result came out and confirmed the accelerating universe and the existence of dark matter, cosmologists have been trying to make better and better measurements of the cosmological parameters, shrink the error bars,” says Peter Nugent, an astrophysicist in Berkeley Lab’s Computational Cosmology Center (C3) and co-author on the paper. “The error bars are now so small that we should be able to say ‘this and this agree,’ so the results presented in 2016 introduced a big tension in cosmology. Our paper presents a path forward for determining whether the current disagreement is real or whether it’s a mistake.”

Better Distance Markers Shed Brighter Light on Cosmic History

The farther away an object is in space, the longer its light takes to reach Earth. So the farther out we look, the further back in time we see. For decades, Type Ia supernovae have been exceptional distance markers because they are extraordinarily bright and similar in brightness no matter where they sit in the cosmos. By looking at these objects, scientists discovered that dark energy is propelling cosmic expansion.
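
The standard-candle idea comes down to the distance modulus, m - M = 5 log10(d / 10 pc). If every Type Ia supernova peaks at roughly the same absolute magnitude M, then its observed apparent magnitude m gives its distance d. The minimal sketch below inverts that relation. It assumes a peak absolute magnitude of -19.3, a commonly quoted typical value. It also ignores the light-curve-shape correction, dust extinction and K-corrections that real analyses apply. The function names are illustrative only.

```python
import math

# Assumption: a typical peak absolute magnitude for a Type Ia supernova.
# Real analyses standardize each event using its light-curve shape and color.
ASSUMED_PEAK_ABS_MAG = -19.3


def luminosity_distance_mpc(apparent_mag, absolute_mag=ASSUMED_PEAK_ABS_MAG):
    """Invert the distance modulus m - M = 5 * log10(d / 10 pc).

    Ignores dust extinction and K-corrections, so this is only a sketch.
    """
    modulus = apparent_mag - absolute_mag
    distance_pc = 10.0 ** (modulus / 5.0 + 1.0)
    return distance_pc / 1.0e6  # parsecs -> megaparsecs


def distance_modulus(distance_mpc):
    """Forward relation: distance modulus for a distance given in megaparsecs."""
    return 5.0 * math.log10(distance_mpc * 1.0e6 / 10.0)
```

Under this assumption, a supernova that peaks at apparent magnitude 20.7 has a distance modulus of 40, which corresponds to a luminosity distance of 1,000 megaparsecs.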

But last year an international team of researchers found an even more reliable distance marker – the first-ever strongly lensed Type Ia supernova. These events occur when the gravitational field of a massive object – like a galaxy – bends and refocuses passing light from a Type Ia event behind it. This “gravitational lensing” causes the supernova’s light to appear brighter and sometimes in multiple locations, if the light rays travel different paths around the massive object.

Because some routes around the massive object are longer than others, light from different images of the same Type Ia event arrives at different times. By measuring the time delays between the strongly lensed images, astrophysicists believe they can obtain a very precise measurement of the cosmic expansion rate.
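
To see why a delay measures the expansion rate, consider the simplest lens model, a singular isothermal sphere (SIS). It has an Einstein radius θ_E and sits a small angle β away from the source. The two images form at θ_E + β and θ_E - β from the lens center. The standard result for their arrival-time difference is Δt = (D_Δt / 2c)(θ_A² - θ_B²) = 2 D_Δt θ_E β / c. Here the "time-delay distance" D_Δt = (1 + z_l) D_l D_s / D_ls combines the angular-diameter distances to the lens, to the source, and between them. At fixed matter density, every one of those distances scales as 1/H0, so a measured delay plus a lens model pins down H0. The minimal sketch below assumes a flat Lambda-CDM cosmology with Ω_m = 0.3 and an SIS lens. Real lens models are far more detailed, and all names here are illustrative.

```python
import math

C_KM_S = 299_792.458               # speed of light, km/s
KM_PER_MPC = 3.085677581491367e19  # kilometres in one megaparsec
RAD_PER_ARCSEC = math.pi / (180.0 * 3600.0)
SECONDS_PER_DAY = 86_400.0


def comoving_distance_mpc(z, h0, omega_m=0.3, steps=2000):
    """Comoving distance in a flat Lambda-CDM universe via Simpson's rule (steps must be even)."""
    omega_lambda = 1.0 - omega_m

    def inv_e(zp):
        return 1.0 / math.sqrt(omega_m * (1.0 + zp) ** 3 + omega_lambda)

    dz = z / steps
    total = inv_e(0.0) + inv_e(z)
    for i in range(1, steps):
        total += (4.0 if i % 2 else 2.0) * inv_e(i * dz)
    return (C_KM_S / h0) * total * dz / 3.0


def time_delay_distance_mpc(z_lens, z_source, h0, omega_m=0.3):
    """D_dt = (1 + z_l) * D_l * D_s / D_ls, using flat-universe angular-diameter distances."""
    dc_l = comoving_distance_mpc(z_lens, h0, omega_m)
    dc_s = comoving_distance_mpc(z_source, h0, omega_m)
    d_l = dc_l / (1.0 + z_lens)
    d_s = dc_s / (1.0 + z_source)
    d_ls = (dc_s - dc_l) / (1.0 + z_source)
    return (1.0 + z_lens) * d_l * d_s / d_ls


def sis_time_delay_days(theta_e_arcsec, beta_arcsec, z_lens, z_source, h0, omega_m=0.3):
    """Delay of the trailing image behind the leading one for an SIS lens (needs beta < theta_E)."""
    theta_e = theta_e_arcsec * RAD_PER_ARCSEC
    beta = beta_arcsec * RAD_PER_ARCSEC
    d_dt_km = time_delay_distance_mpc(z_lens, z_source, h0, omega_m) * KM_PER_MPC
    return 2.0 * d_dt_km * theta_e * beta / C_KM_S / SECONDS_PER_DAY


def infer_h0(observed_delay_days, model_delay_days, h0_assumed):
    """Delay scales as 1/H0, so rescale the H0 that was assumed when computing model_delay_days."""
    return h0_assumed * model_delay_days / observed_delay_days
```

With θ_E = 1 arcsec, β = 0.2 arcsec, z_l = 0.5, z_s = 1.0 and H0 = 70 km/s/Mpc, the predicted delay is roughly 50 days. Doubling the assumed H0 halves it, which is why an observed delay can be turned into an H0 estimate.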

“Strongly lensed supernovae are much rarer than conventional supernovae – they’re one in 50,000. Although this measurement was first proposed in the 1960s, it has never been made because only two strongly lensed supernovae have been discovered to date, neither of which were amenable to time delay measurements,” says Danny Goldstein, a UC Berkeley graduate student and lead author on the new Astrophysical Journal paper.

After running a number of computationally intensive simulations of supernova light at the National Energy Research Scientific Computing Center (NERSC), a Department of Energy Office of Science User Facility located at Berkeley Lab, Goldstein and Nugent suspect that they’ll be able to find about 1,000 of these strongly lensed Type Ia supernovae in data collected by the upcoming Large Synoptic Survey Telescope (LSST) – about 20 times more than previous expectations. These results are the basis of their new paper in the Astrophysical Journal.

“With three lensed quasars – cosmic beacons emanating from massive black holes in the centers of galaxies – collaborators and I measured the expansion rate to 3.8 percent precision. We got a value higher than the CMB measurement, but we need more systems to be really sure that something is amiss with the standard model of cosmology,” says Thomas Collett, an astrophysicist at the University of Portsmouth and a co-author on the new Astrophysical Journal paper. “It can take years to get a time delay measurement with quasars, but this work shows we can do it for supernovae in months. One thousand lensed supernovae will let us really nail down the cosmology.”
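
Collett's point about needing more systems is, at heart, error-bar arithmetic. Suppose each lens system gives an independent H0 estimate with fractional uncertainty σ_i. The inverse-variance-weighted average then has uncertainty (Σ 1/σ_i²)^(-1/2), so N equally good systems shrink the error by a factor of √N. The minimal sketch below is idealized. It treats only statistical errors; systematics such as lens-mass modeling do not average down this way. It also assumes, purely for illustration, that each supernova system is as precise as one of the three quasar systems. The function names are hypothetical.

```python
import math


def combined_fractional_error(errors):
    """Uncertainty of the inverse-variance-weighted mean of independent measurements."""
    if not errors:
        raise ValueError("need at least one measurement")
    return 1.0 / math.sqrt(sum(1.0 / e ** 2 for e in errors))


def weighted_mean(values, errors):
    """Inverse-variance-weighted mean of independent measurements."""
    weights = [1.0 / e ** 2 for e in errors]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# Back out the per-system precision implied by "3.8 percent from three systems",
# assuming (for illustration only) that all three were equally precise.
per_system = 0.038 * math.sqrt(3)
three_systems = combined_fractional_error([per_system] * 3)
thousand_systems = combined_fractional_error([per_system] * 1000)  # statistical floor only
```

Under these assumptions, each system carries roughly 6.6 percent uncertainty. A thousand comparable systems would push the purely statistical error to about 0.2 percent.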

In addition to forecasting how many of these events LSST should find, the NERSC simulations also helped the researchers show that strongly lensed Type Ia supernovae can be very accurate cosmological probes.

“When cosmologists try to measure time delays, the problem they often encounter is that individual stars in the lensing galaxy can distort the light curves of the different images of the event, making it harder to match them up,” says Goldstein. “This effect, known as ‘microlensing,’ makes it harder to measure accurate time delays, which are essential for cosmology.”

But after running their simulations, Goldstein and Nugent found that microlensing did not change the colors of strongly lensed Type Ia supernovae in their early phases. Researchers can therefore remove the unwanted effects of microlensing by working with colors instead of light curves. Once these effects are removed, scientists will be able to match the light curves easily and make accurate cosmological measurements.
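
Microlensing multiplies an image's flux by a magnification μ. If μ is the same in every filter (achromatic), each band's magnitude shifts by the same amount, -2.5 log10 μ. A color, the difference between two bands' magnitudes, is therefore unchanged even when the individual light curves are distorted. The minimal sketch below builds two images of a hypothetical supernova from a made-up two-band light curve. It applies a different toy microlensing magnification to each image, then recovers the time delay by sliding one image's color curve against the other. The light-curve shapes, the magnifications and the simple grid-search estimator are all assumptions for illustration, not the method used in the paper. It also assumes achromatic microlensing, which the simulations indicate holds in the early phases.

```python
import math


def magnitude(flux):
    """Astronomical magnitude of a positive flux in arbitrary units."""
    return -2.5 * math.log10(flux)


def color(flux_blue, flux_red):
    """Color index: blue-band magnitude minus red-band magnitude."""
    return magnitude(flux_blue) - magnitude(flux_red)


def intrinsic_fluxes(t):
    """Hypothetical two-band supernova light curve (arbitrary units, t in days)."""
    blue = 10.0 * math.exp(-((t - 15.0) / 10.0) ** 2) + 1.0
    red = 8.0 * math.exp(-((t - 20.0) / 12.0) ** 2) + 1.0
    return blue, red


def interpolate(times, values, t):
    """Linear interpolation of values at time t; None outside the sampled range."""
    if t < times[0] or t > times[-1]:
        return None
    for i in range(len(times) - 1):
        if times[i] <= t <= times[i + 1]:
            w = (t - times[i]) / (times[i + 1] - times[i])
            return (1.0 - w) * values[i] + w * values[i + 1]
    return values[-1]  # single-sample edge case


def estimate_delay(times, color_a, color_b, trial_delays, min_overlap=5):
    """Delay d minimizing the mean squared mismatch between color_b(t) and color_a(t - d)."""
    best_delay, best_score = None, math.inf
    for d in trial_delays:
        residuals = []
        for t, c_b in zip(times, color_b):
            c_a = interpolate(times, color_a, t - d)
            if c_a is not None:
                residuals.append((c_a - c_b) ** 2)
        if len(residuals) < min_overlap:
            continue
        score = sum(residuals) / len(residuals)
        if score < best_score:
            best_delay, best_score = d, score
    return best_delay


# Two images of the same supernova: B trails A by 12 days, and each image is
# magnified by a different (for A, time-varying) achromatic microlensing factor.
TRUE_DELAY = 12.0
times = [float(day) for day in range(61)]
color_a, color_b = [], []
for t in times:
    mu_a = 1.0 + 0.01 * t  # toy microlensing magnification on image A
    mu_b = 0.7             # toy microlensing magnification on image B
    blue_a, red_a = intrinsic_fluxes(t)
    blue_b, red_b = intrinsic_fluxes(t - TRUE_DELAY)
    color_a.append(color(mu_a * blue_a, mu_a * red_a))
    color_b.append(color(mu_b * blue_b, mu_b * red_b))

recovered = estimate_delay(times, color_a, color_b, [0.5 * k for k in range(61)])
```

The two images' flux light curves differ in shape because of the microlensing factors. Their color curves, however, are identical apart from the shift, so the grid search recovers the 12-day delay.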

They came to this conclusion by modeling the supernovae using the SEDONA code, which was developed with funding from two DOE Scientific Discovery through Advanced Computing (SciDAC) Institutes to calculate light curves, spectra and polarization of aspherical supernova models.

“In the early 2000s DOE funded two SciDAC projects to study supernova explosions, we basically took the output of those models and passed them through a lensing system to prove that the effects are achromatic,” says Nugent.

“The simulations give us a dazzling picture of the inner workings of a supernova, with a level of detail that we could never know otherwise,” says Daniel Kasen, an astrophysicist in Berkeley Lab’s Nuclear Science Division, and a co-author on the paper. “Advances in high performance computing are finally allowing us to understand the explosive death of stars, and this study shows that such models are needed to figure out new ways to measure dark energy.”

Taking Supernova Hunting to the Extreme

When LSST begins full survey operations in 2023, it will be able to scan the entire visible sky in only three nights from its perch on the Cerro Pachón ridge in north-central Chile. Over its 10-year mission, LSST is expected to deliver over 200 petabytes of data. As part of the LSST Dark Energy Science Collaboration, Nugent and Goldstein hope that they can run some of this data through a novel supernova-detection pipeline, based at NERSC.

For more than a decade, Nugent’s Real-Time Transient Detection pipeline running at NERSC has been using machine learning algorithms to scour observations collected by the Palomar Transient Factory (PTF) and then the Intermediate Palomar Transient Factory (iPTF). Every night it searches for “transient” objects that change in brightness or position by comparing the new observations with all of the data collected on previous nights. Within minutes of an interesting event being discovered, machines at NERSC trigger telescopes around the globe to collect follow-up observations. In fact, it was this pipeline that revealed the first-ever strongly lensed Type Ia supernova earlier this year.
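
A bare-bones form of image differencing illustrates the comparison with previous nights. Build a reference image from earlier exposures and subtract it from the new exposure. Then flag pixels whose flux changed by more than a few times the noise. This is a simplified sketch only, not the Real-Time Transient Detection pipeline. A real pipeline must also align images and match their point-spread functions, and it uses machine learning to weed out false detections. The function names and the 5-sigma threshold are assumptions.

```python
import statistics


def build_reference(previous_nights):
    """Pixel-by-pixel median of earlier exposures (each a 2-D list of fluxes)."""
    n_rows = len(previous_nights[0])
    n_cols = len(previous_nights[0][0])
    return [
        [statistics.median([image[r][c] for image in previous_nights]) for c in range(n_cols)]
        for r in range(n_rows)
    ]


def find_transients(new_image, previous_nights, noise_sigma, threshold=5.0):
    """List (row, col, flux change) for pixels that brightened or faded beyond threshold * noise."""
    reference = build_reference(previous_nights)
    candidates = []
    for r, row in enumerate(new_image):
        for c, flux in enumerate(row):
            change = flux - reference[r][c]
            if abs(change) > threshold * noise_sigma:
                candidates.append((r, c, change))
    return candidates


# Toy example: a quiet 3x3 field, then a new source appears in the centre pixel.
quiet_night = [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
history = [quiet_night, quiet_night, quiet_night]
tonight = [[10.0, 10.0, 10.0], [10.0, 100.0, 10.0], [10.0, 10.0, 10.0]]
alerts = find_transients(tonight, history, noise_sigma=1.0)
```

Using a median rather than a mean for the reference keeps a single unusual earlier night from contaminating the comparison.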

“What we hope to do for the LSST is similar to what we did for Palomar, but times 100,” says Nugent. “There’s going to be a flood of information every night from LSST. We want to take that data and ask what do we know about this part of the sky, what’s happened there before and is this something we’re interested in for cosmology?”

He adds that once researchers identify the first light of a strongly lensed supernova event, computational modeling could also be used to precisely predict when light from the next image will appear. Astronomers can use this information to trigger ground- and space-based telescopes to follow up and catch this light, essentially allowing them to observe a supernova seconds after it goes off.
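
Once a lens model predicts the delays between images, scheduling the follow-up is simple arithmetic. Each trailing image should appear at the leading image's detection time plus its predicted delay, within a window set by the model's uncertainty. The minimal sketch below uses hypothetical delays and uncertainties and expresses times as Modified Julian Dates (MJD). The class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class PredictedImage:
    name: str
    delay_days: float        # lens-model delay relative to the leading image
    delay_sigma_days: float  # 1-sigma uncertainty on that delay


def follow_up_windows(first_light_mjd, images, n_sigma=2.0):
    """Return (name, window_start, expected, window_end) in MJD, earliest first."""
    windows = []
    for image in images:
        expected = first_light_mjd + image.delay_days
        half_width = n_sigma * image.delay_sigma_days
        windows.append((image.name, expected - half_width, expected, expected + half_width))
    return sorted(windows, key=lambda window: window[2])


# Hypothetical lens-model output for two trailing images.
predictions = [PredictedImage("B", 10.0, 0.5), PredictedImage("C", 3.0, 0.25)]
schedule = follow_up_windows(60000.0, predictions)
```

The tighter the lens model's delay uncertainties, the narrower each window becomes, and the closer to the true arrival telescopes can be pointed.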

“I came to Berkeley Lab 21 years ago to work on supernova radiative-transfer modeling and now for the first time we’ve used these theoretical models to prove that we can do cosmology better,” says Nugent. “It’s exciting to see DOE reap the benefits of investments in computational cosmology that they started making decades ago.”

The SciDAC partnership project – Computational Astrophysics Consortium: Supernovae, Gamma-Ray Bursts, and Nucleosynthesis – funded by the DOE Office of Science and the National Nuclear Security Administration, was led by Stan Woosley of UC Santa Cruz and supported both Nugent and Kasen of Berkeley Lab.

NERSC is a DOE Office of Science User Facility.

###

Lawrence Berkeley National Laboratory addresses the world’s most urgent scientific challenges by advancing sustainable energy, protecting human health, creating new materials, and revealing the origin and fate of the universe. Founded in 1931, Berkeley Lab has had its scientific expertise recognized with 13 Nobel Prizes. The University of California manages Berkeley Lab for the U.S. Department of Energy’s Office of Science. For more, visit www.lbl.gov.

DOE’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.