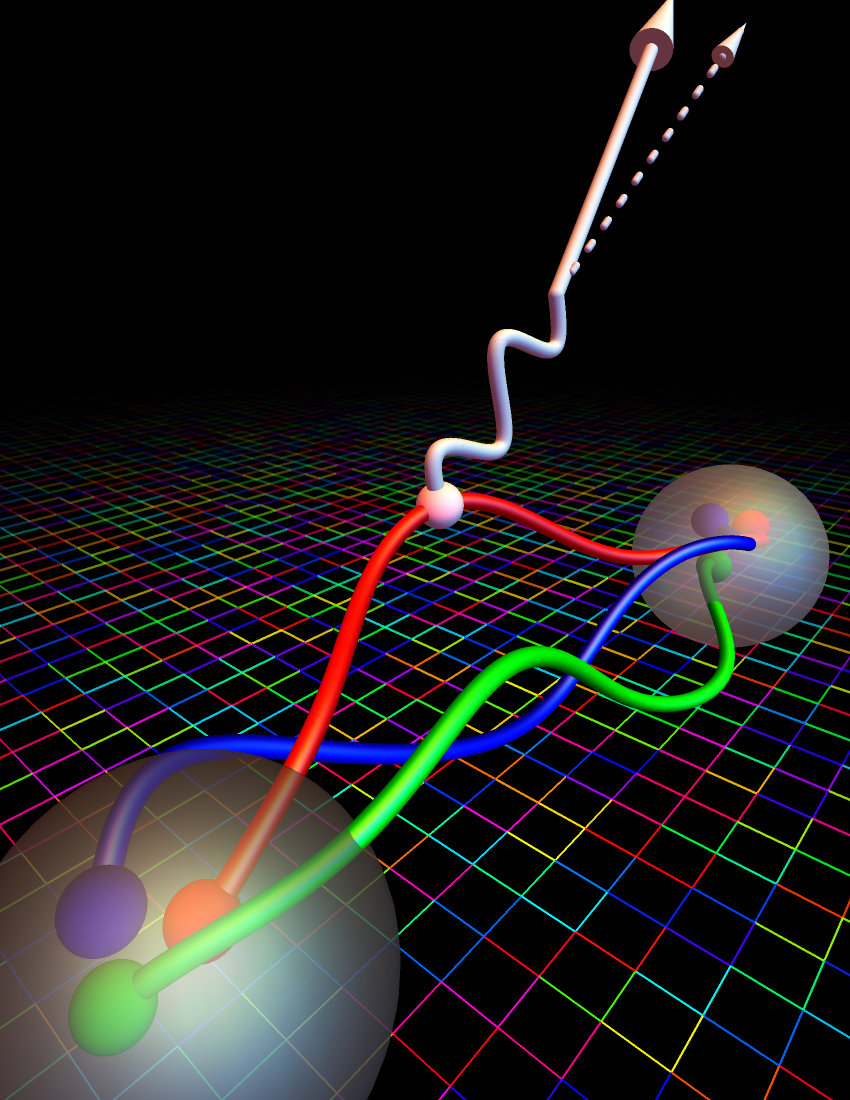

In this illustration, the grid in the background represents the computational lattice that theoretical physicists used to calculate a particle property known as nucleon axial coupling. This property determines how a W boson (white wavy line) interacts with one of the quarks in a neutron (large transparent sphere in foreground), emitting an electron (large arrow) and antineutrino (dotted arrow) in a process called beta decay. This process transforms the neutron into a proton (distant transparent sphere). (Credit: Evan Berkowitz/Jülich Research Center, Lawrence Livermore National Laboratory)

This article is adapted from a press release prepared by Oak Ridge National Laboratory. View the original release.

– By Katie Elise Jones

André Walker-Loud, a staff scientist at the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab), is co-leader of a team that is among the six finalists for the Association for Computing Machinery’s Gordon Bell Prize, which will be awarded this month.

The team that Walker-Loud led with Pavlos Vranas of DOE’s Lawrence Livermore National Laboratory (LLNL) has improved the ability to compute the neutron lifetime precisely using the latest generation of DOE supercomputers, including the 200-petaflop Summit supercomputer at DOE’s Oak Ridge National Laboratory (ORNL) and the 125-petaflop Sierra supercomputer at LLNL.

Walker-Loud’s team accelerated the scientific application of these simulations 10-fold on Sierra and 15-fold on Summit compared to previous scientific runs on ORNL’s 27-petaflop Titan supercomputer.

“New machines like Sierra and Summit are disruptively fast,” Walker-Loud said. “As we move toward exascale, job management is becoming a huge factor for success. With systems like Sierra and Summit, we will be able to run hundreds of thousands of jobs and generate several petabytes of data in a few days – a volume that is too much for the current standard management methods.”

The team submitted a paper, “Simulating the weak death of the neutron in a femtoscale universe with near-exascale computing,” for consideration by the ACM Gordon Bell Prize Committee. The prize winner will be announced at the 2018 International Conference for High Performance Computing, Networking, Storage, and Analysis, or SC18, in Dallas on November 15.

Simulations could help unlock universe’s mysteries

There is a fine line between particle physics and nuclear physics at which the subatomic particles known as quarks and gluons first join into protons and neutrons, and then into atomic nuclei.

On one side of this line is the universe as it should be according to the Standard Model of particle physics: nearly devoid of matter and filled with leftover radiation from the mutual destruction of matter and antimatter. On the other side of this line is the universe as we observe it: space-time speckled with matter in the form of galaxies, suns, and planets.

To understand the asymmetry between matter and antimatter, scientists are using massive supercomputers in the search for new physics discoveries. Through a sophisticated numerical method known as lattice quantum chromodynamics (QCD), scientists calculate the interactions of quarks and gluons on a lattice of space-time to study the emergence of nuclei from the fundamental physics theory of QCD. By bridging the studies of particle interactions and atomic nuclei, lattice QCD simulations are also an entry point for learning much more about how the universe works.

Simulating more space-time

From left: David Brantley, André Walker-Loud, Pavlos Vranas, Henry Monge-Camacho, Thorsten Kurth, and Chia Cheng “Jason” Chang – pictured here at Berkeley Lab – participated in an international team that calculated the nucleon axial coupling, a property important to understanding the neutron lifetime. (Credit: Marilyn Chung/Berkeley Lab)

Earlier this year, Walker-Loud’s team solved an important calculation related to neutron lifetime at a new level of precision on the Titan supercomputer at the Oak Ridge Leadership Computing Facility (OLCF), a DOE Office of Science User Facility at ORNL that is also home to Summit, and on the supercomputers at LLNL. Researchers calculated the nucleon axial coupling — a fundamental property of protons and neutrons — at 1 percent precision (a very low margin of error). The research community had predicted this level of precision would not be possible until 2020, but Walker-Loud’s team was able to reduce the amount of statistics needed to complete the calculation by a factor of 10 using its improved physics algorithm.

“The lifetime of a neutron, which is about 15 minutes, is important because it has a profound effect on the mass composition of the universe,” said Pavlos Vranas of LLNL.

For the Gordon Bell Prize submission, researchers wanted to demonstrate that they can extend this accomplishment on Summit and Sierra by increasing the size of the space-time lattice and reducing uncertainty in future projects.

Increasing lattice size in lattice QCD calculations is also a long-term goal of the nuclear physics community so that researchers can routinely model light nuclei, such as deuterium or helium, directly from QCD — problems that are even more complex and challenging to simulate than the neutron lifetime.

On Summit, researchers modeled a lattice with 64 sites in each spatial direction and 96 in time. Each site is separated from its nearest neighbors by only 0.09 femtometers (a femtometer is 1 quadrillionth of a meter). Although the total size of the simulated universe is a mere 5.6 femtometers across, it is large enough to study the weak death of the neutron and reduce uncertainty in calculations. Walker-Loud said this is the smallest lattice the team projects it will need to improve its calculations, and systems like Sierra and Summit will enable the use of significantly larger lattices.
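As a rough check on these figures, the short Python sketch below counts the lattice sites and multiplies out the width of the box. The function names are illustrative and are not taken from the team's code. With the rounded spacing of 0.09 femtometers, the width comes to about 5.76 femtometers, slightly more than the 5.6 femtometers quoted above; a spacing of 0.0875 femtometers would give exactly 5.6, so the gap is presumably a rounding effect, though that is an assumption.

```python
# Lattice dimensions as quoted in the article.
SPATIAL_SITES = 64    # sites along each of the three spatial directions
TEMPORAL_SITES = 96   # sites along the time direction
SPACING_FM = 0.09     # rounded spacing between neighboring sites, in femtometers


def total_sites(spatial: int, temporal: int) -> int:
    """Number of sites in a lattice with spatial^3 x temporal points."""
    return spatial ** 3 * temporal


def spatial_extent_fm(spatial: int, spacing_fm: float) -> float:
    """Width of the simulated box along one spatial direction, in femtometers."""
    return spatial * spacing_fm


if __name__ == "__main__":
    print("sites:", total_sites(SPATIAL_SITES, TEMPORAL_SITES))
    print("width (fm):", round(spatial_extent_fm(SPATIAL_SITES, SPACING_FM), 2))
```

Even this smallest useful lattice holds more than 25 million sites, which helps explain why the calculation is spread across thousands of GPUs.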

One of the most significant challenges for researchers is distributing their calculations into many pieces.

“Our science problem is a statistical one that requires running many thousands to millions of small jobs, but that is not an efficient way to deploy jobs on a large supercomputer,” Walker-Loud said.

The overall efficiency of the calculations is achieved with QUDA, a QCD library optimized for GPUs and developed by Kate Clark and colleagues of NVIDIA and the lattice QCD research community. QUDA is integrated into the Chroma code, developed by Bálint Joó of Jefferson Lab and others working with the USQCD collaboration to develop lattice QCD calculations for large-scale computers. The team wrapped their own code with these optimized libraries into lalibe to connect with Chroma. Lalibe development is led by Arjun Gambhir of LLNL.

Lattice QCD researchers have been taking advantage of GPUs for several years, but Summit and Sierra boost computational power by linking multiple GPUs on a single node with local memory rather than pairing a single GPU with a single CPU.

“The GPUs on the node have much faster communication bandwidth than node-to-node communication. We’re tackling bigger problems on a smaller number of nodes,” Walker-Loud said. “Based on these Gordon Bell runs, it would only take 2 weeks to generate 6 petabytes of data. This is unmanageable with our current production models.”

In order to manage the anticipated factor-of-10 growth in the number of jobs and data, the team has been upgrading their Bash manager METAQ, developed by Evan Berkowitz of Jülich Research Centre, to a C++ version called MPI_JM. The development of MPI_JM is led by Ken McElvain of the University of California, Berkeley.

Both the METAQ and MPI_JM managers act as a middle layer between the system’s batch scheduler and the application’s job scripts, enabling the team to bundle hundreds of thousands of tasks more effectively into a few hundred jobs that run one after the other.
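The bundling idea can be illustrated with a simplified Python sketch. It is not the algorithm used by METAQ or MPI_JM, which the article does not describe; it only shows how many small tasks might be packed into a smaller number of jobs, assuming each task has an estimated run time and each job has a fixed wall-clock budget. The name `bundle_tasks` and its parameters are hypothetical.

```python
from typing import List


def bundle_tasks(task_minutes: List[float], job_minutes: float) -> List[List[int]]:
    """Greedily pack task indices into jobs without exceeding a job's time budget.

    Hypothetical illustration only: tasks are taken in order and a new job is
    started whenever the next task would not fit in the current one.
    """
    jobs: List[List[int]] = []
    current: List[int] = []
    used = 0.0
    for index, minutes in enumerate(task_minutes):
        if minutes > job_minutes:
            raise ValueError(f"task {index} does not fit in a single job")
        if current and used + minutes > job_minutes:
            jobs.append(current)       # close the full job
            current, used = [], 0.0    # and open a new one
        current.append(index)
        used += minutes
    if current:
        jobs.append(current)
    return jobs


if __name__ == "__main__":
    # Five 30-minute tasks packed into 60-minute jobs.
    print(bundle_tasks([30, 30, 30, 30, 30], 60))
```

A real manager would also track how many nodes each task needs and run several tasks side by side within a job; this sketch leaves that out to keep the packing step visible.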

On a supercomputer with more than 4,000 nodes, splitting a job between nodes on opposite sides of the room degrades performance by wasting precious communication time. The MPI_JM library sorts the nodes to maximize the probability that tasks will be placed on adjacent nodes on the system.
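A minimal Python sketch of the node-sorting idea follows. It assumes, purely for illustration, that nodes with nearby ID numbers sit near each other in the machine; how MPI_JM actually determines adjacency is not stated in the article. The function name `assign_adjacent_nodes` is hypothetical.

```python
from typing import Dict, List


def assign_adjacent_nodes(node_ids: List[int], nodes_per_task: int) -> Dict[int, List[int]]:
    """Sort node IDs, then give each task a contiguous slice of the sorted list.

    Assumption for illustration: numerically close IDs are physically close.
    Leftover nodes that cannot fill a whole task are left unassigned.
    """
    if nodes_per_task < 1:
        raise ValueError("nodes_per_task must be at least 1")
    ordered = sorted(node_ids)
    assignments: Dict[int, List[int]] = {}
    last_start = len(ordered) - nodes_per_task
    for task, start in enumerate(range(0, last_start + 1, nodes_per_task)):
        assignments[task] = ordered[start:start + nodes_per_task]
    return assignments


if __name__ == "__main__":
    # The scheduler hands back nodes in arbitrary order; tasks need two nodes each.
    print(assign_adjacent_nodes([7, 2, 5, 3, 6, 4], 2))
```

Without the sort, the first task in this example would land on nodes 7 and 2, which under the stated assumption could be far apart.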

In addition to managing these jobs more effectively, MPI_JM allows users to place “CPU-only” tasks on the same compute nodes as GPU-intense work without the tasks interfering with each other. For calculations of the neutron lifetime, this can save 10 to 20 percent of the project’s computing time as the CPU-only tasks previously occupied the entire node. For more complex calculations in the future, the savings may be a factor of two or more.

The team scaled to 4,224 nodes on Sierra with MPI_JM and 1,024 nodes on Summit with METAQ, achieving 20 petaflops on the larger Sierra run, a notable performance leap for this type of lattice QCD calculation. The team projected that scaling to as many nodes on Summit could have achieved a performance of up to 30 petaflops.

Using MPI_JM on Summit, the team also demonstrated splitting GPUs on the nodes so that four of six GPUs per node could be assigned to work on one task while the remaining two GPUs could work on a different task. For problems that do not divide evenly by three, this will allow MPI_JM users to utilize all the GPUs on the nodes simultaneously.
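The four-plus-two split can be sketched in a few lines of Python. The function `split_gpus` is hypothetical and is not part of MPI_JM. It produces comma-separated GPU index lists in the format accepted by the `CUDA_VISIBLE_DEVICES` environment variable, one common way to restrict a process to a subset of GPUs; whether MPI_JM uses that mechanism is not stated in the article.

```python
from typing import List


def split_gpus(gpus_per_node: int, group_sizes: List[int]) -> List[str]:
    """Return one comma-separated list of GPU indices per task group on a node."""
    if any(size < 1 for size in group_sizes):
        raise ValueError("every group needs at least one GPU")
    if sum(group_sizes) > gpus_per_node:
        raise ValueError("groups request more GPUs than the node has")
    groups: List[str] = []
    next_gpu = 0
    for size in group_sizes:
        indices = range(next_gpu, next_gpu + size)
        groups.append(",".join(str(gpu) for gpu in indices))
        next_gpu += size
    return groups


if __name__ == "__main__":
    # Six GPUs per node: four for one task, two for another.
    for task, visible in enumerate(split_gpus(6, [4, 2])):
        print(f"task {task}: CUDA_VISIBLE_DEVICES={visible}")
```

Each task would then see only its own GPUs, so the two tasks can share a node without competing for the same devices.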

Both METAQ and MPI_JM were developed to be independent of the underlying application, so any user of Summit and Sierra should, in principle, be able to integrate this management software into their workflow.

“MPI_JM has provided significant improvements over our METAQ manager. We were able to get 4,224 nodes on Sierra performing real work within five minutes when compiled with MVAPICH2,” Walker-Loud said. “The fact that we have an extremely fast GPU code (QUDA) and were able to wrap our entire lattice QCD scientific application with new job managers we wrote (METAQ and MPI_JM) got us to the Gordon Bell finalist stage, I believe.”

More info:

- Supercomputers Provide New Window Into the Life and Death of a Neutron

- 5 Gordon Bell Finalists Credit Summit for Vanguard Computational Science

###

Lawrence Berkeley National Laboratory addresses the world’s most urgent scientific challenges by advancing sustainable energy, protecting human health, creating new materials, and revealing the origin and fate of the universe. Founded in 1931, Berkeley Lab’s scientific expertise has been recognized with 13 Nobel Prizes. The University of California manages Berkeley Lab for the U.S. Department of Energy’s Office of Science. For more, visit www.lbl.gov.

DOE’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit science.energy.gov.