Key Takeaways

- Radiation mapping can be used to improve safety at sites with radioactive sources (such as power plants or hospitals), enforce non-proliferation agreements, or guide environmental cleanup and disaster response

- Scientists at Berkeley Lab have created multi-sensor systems that can map nuclear radiation in 3D in real time and are now testing how to integrate their system with robots that can autonomously investigate radiation areas

- Smarter robots could help human operators assess difficult-to-access environments and reduce risk from hazards including radiation exposure and damaged infrastructure

In 2013, researchers carried a Microsoft Kinect camera through houses in Japan’s Fukushima Prefecture. The device’s infrared light traced the contours of the buildings, making a rough 3D map. On top of this, the team layered information from an early version of a hand-held gamma-ray imager, displaying the otherwise invisible nuclear radiation from the Fukushima Daiichi Nuclear Power Plant accident.

This month, scientists at the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) are teaching a robotic dog to intelligently hunt out radiological material using a self-contained suite of sensors on its back. It’s fair to say radiation mapping has come a long way.

“It can take a long time to see improvement in radiological technology like gamma-ray detectors, so we’re defining the state-of-the-art by leveraging other sensor types,” said Ren Cooper, deputy head of Berkeley Lab’s Applied Nuclear Physics (ANP) program. “It’s not just nuclear physics – it’s robotics, computer vision, software, and other elements coming together that enable societal benefits.”

Those benefits include improved nuclear safety through monitoring of radioactive sources at power plants, particle accelerators, and hospitals; nuclear security and non-proliferation efforts; environmental cleanup and remediation; and emergency response to disasters.

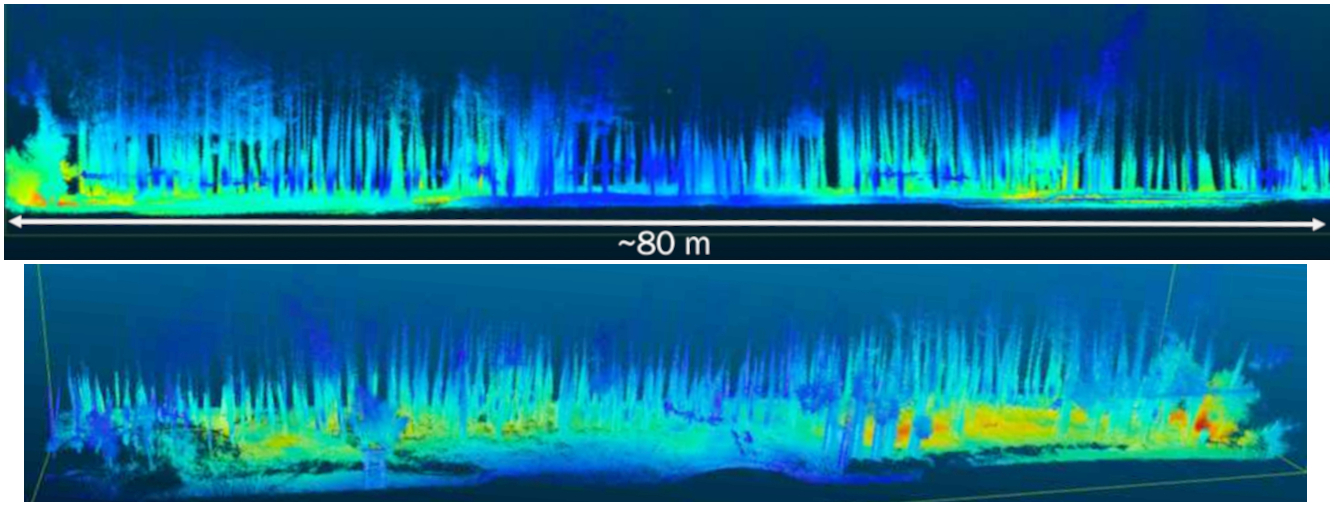

Since their Kinect days, Berkeley Lab researchers have combined more and more sensors for mapping radiation. They’ve integrated video camera feeds, LIDAR (light detection and ranging), inertial measurement units (such as gyroscopes and accelerometers), and particle detectors into self-contained systems with power and onboard computing. In a deceptively simple technique called “scene data fusion,” that massive amount of information from multiple sources is crunched into one image.
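
To make the idea of combining position data with radiation counts concrete, here is a deliberately simplified sketch. It is not Berkeley Lab's scene data fusion algorithm: it assumes a single point source, a non-imaging detector, no shielding or background, and a simple inverse-square response. The function name `localize_point_source` and all of its parameters are hypothetical. For each voxel in a 3D grid, it asks how well a source at that voxel would explain the counts recorded along the detector's path.

```python
from itertools import product

def localize_point_source(poses, counts, grid_shape, voxel_size, origin=(0.0, 0.0, 0.0)):
    """Return (best_voxel, strength) for a single-point-source fit.

    poses:  detector positions (x, y, z) in metres, e.g. from LIDAR/IMU localization
    counts: gamma-ray counts recorded at each pose (same length as poses)
    """
    best_voxel, best_strength, best_residual = None, 0.0, float("inf")
    for voxel in product(*(range(n) for n in grid_shape)):
        # Centre of this voxel in world coordinates
        centre = [o + (i + 0.5) * voxel_size for o, i in zip(origin, voxel)]
        # Inverse-square sensitivity of each measurement to a source here;
        # the floor keeps the value finite if a pose sits inside the voxel.
        sens = [
            1.0 / max(sum((c - p) ** 2 for c, p in zip(centre, pose)), voxel_size ** 2)
            for pose in poses
        ]
        # Least-squares source strength s minimising sum((counts - s * sens)^2)
        strength = sum(s * c for s, c in zip(sens, counts)) / sum(s * s for s in sens)
        residual = sum((c - strength * s) ** 2 for s, c in zip(sens, counts))
        if residual < best_residual:
            best_voxel, best_strength, best_residual = voxel, strength, residual
    return best_voxel, best_strength
```

A real system would work with imaging detectors, measurement noise, multiple sources, and the 3D scene model itself, but the core step is the same: every radiation reading is tied to where the detector was when it was recorded.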

Researchers used photographs to make a model of a crane claw near the Chernobyl Nuclear Power Plant in Ukraine. Gamma-ray data overlaid on the claw, an example of “scene data fusion,” shows the most radioactive parts (in red).

“What we are now able to do with our systems is quite revolutionary: we’re mapping the world in three dimensions and in real time,” said Kai Vetter, a professor at UC Berkeley and the founder and head of ANP at Berkeley Lab. He and several grad students collaborated with the Japan Atomic Energy Agency to map houses in Fukushima. “It’s an extremely powerful way to look at the environment and make decisions, because we have this tool that can visualize radiation anywhere.”

These systems can already be carried by hand through rough terrain, flown by a drone through open areas, or strapped to a robot that can maneuver inside a building. Now researchers are building on these capabilities to enable more independent robotic actions, such as investigating hotspots or finding the boundaries of a radiation area.

Teaching a robotic dog new tricks

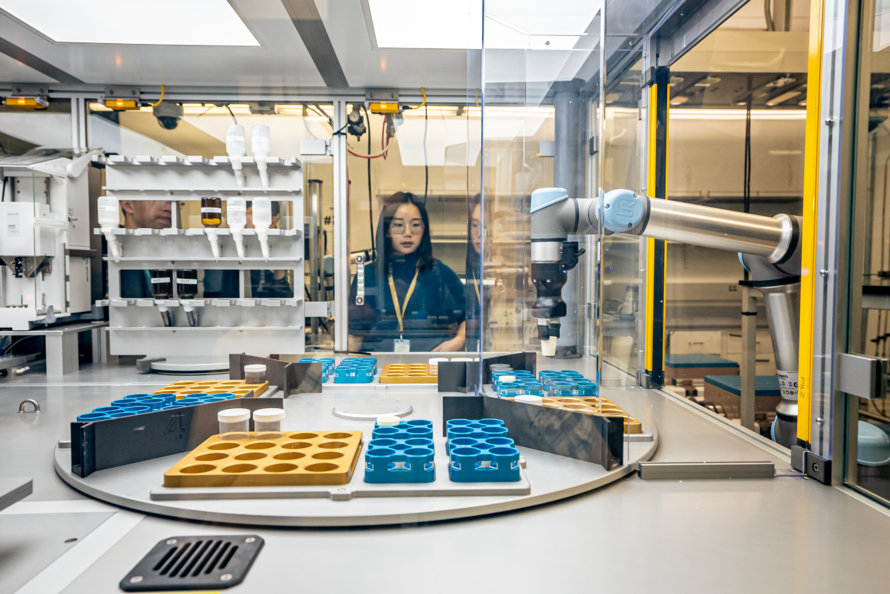

Strapping a radiation detection system to a robotic dog isn’t hard. It takes Berkeley Lab scientist Brian Quiter about two minutes to attach a Localization and Mapping Platform (LAMP) to the Spot robotic dog from Boston Dynamics. Integrating the two systems, so that Spot gets the radiation data and knows how to make smart choices, is another story.

A huge factor is teaching the dog what objects are and how to respond to them, a process in computer vision known as “semantic segmentation.”

“If you had a radioactive source on the other side of a wall, the semantically ignorant dog would come up and say, ‘Hey, this wall is radioactive,’” said Quiter, who is also a deputy head of ANP. “A semantically smart dog will say, ‘It’s radioactive over here – I want to see what’s on the other side of the wall.’ Right now, there is no mechanism by which to do that. If we can incorporate this knowledge into our algorithms, I think that will be a big deal. It will improve the efficacy of automated radioactivity mapping.”

With that kind of programming, an autonomous robot could intelligently map out contaminated objects and areas for clean-up. Or it could walk down a hallway, identify waste canisters that are supposed to have a particular radiation signature, and immediately gather additional data to investigate any anomalies.
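
As a rough illustration of the canister check described above, the sketch below compares observed counts against expected counts and flags large deviations. It assumes simple Poisson counting statistics with a Gaussian approximation, and that every expected count is positive. The function name, the canister IDs, and the three-sigma threshold are hypothetical choices, not details from the Berkeley Lab system.

```python
import math

def flag_anomalous_canisters(expected_counts, observed_counts, threshold_sigma=3.0):
    """Return (canister_id, z_score) for canisters that deviate from expectation.

    expected_counts, observed_counts: dicts mapping canister ID to gamma-ray counts
    collected over the same measurement time.
    """
    flagged = []
    for canister_id, expected in expected_counts.items():
        observed = observed_counts[canister_id]
        # For Poisson counts the standard deviation is sqrt(expected)
        z = (observed - expected) / math.sqrt(expected)
        if abs(z) > threshold_sigma:
            flagged.append((canister_id, z))
    return flagged
```

A robot using logic like this could linger at any flagged canister to collect more data before moving on.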

“Making good measurements takes time – and robots can make good measurements,” Quiter said. “That frees inspectors or operators up to do other things and means that the robot can take the radiation dose rather than the human, so everyone wins. A robot can take a lot more radiation.”

The future of rad maps

Radiation mapping has evolved over the past decade, but there are still areas researchers would like to improve. Current systems are good at mapping relative amounts of radiation in an area and picking out hotspots, but producing a quantitative map of expected radiation doses from a limited set of measurements remains an area of active work.

Spot carries a LAMP system during testing. (Credit: Thor Swift/Berkeley Lab)

“Quantitative readings are no easy task because the world is very complex,” Vetter said. “With radiation, the distance of readings matters, and the amount of shielding in the way matters. A lot of that has to be inferred by the measurement we do, but the advantage we have now is that we’re obtaining a lot of information about our environment using machine learning and machine vision.”
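
The effect of distance and shielding that Vetter describes can be seen in the textbook model of an isotropic point source behind a slab of shielding: the unscattered flux falls off with the square of the distance and exponentially with shield thickness. The sketch below implements only that idealized model. It ignores scattering (buildup), air attenuation, and detector response, and the function and parameter names are hypothetical.

```python
import math

def expected_flux(source_rate, distance_m, shield_thickness_m=0.0, attenuation_per_m=0.0):
    """Unscattered gamma-ray flux (photons per m^2 per second) at a detector.

    source_rate:        photons emitted per second by an isotropic point source
    distance_m:         source-to-detector distance in metres
    shield_thickness_m: thickness of shielding between source and detector
    attenuation_per_m:  linear attenuation coefficient of the shield material
    """
    if distance_m <= 0:
        raise ValueError("distance_m must be positive")
    geometric = 1.0 / (4.0 * math.pi * distance_m ** 2)          # inverse-square spreading
    shielding = math.exp(-attenuation_per_m * shield_thickness_m)  # exponential attenuation
    return source_rate * geometric * shielding
```

In this model, doubling the distance cuts the flux to a quarter, and a shield whose thickness equals one half-value layer cuts it in half. Because a weak nearby source and a strong shielded one can produce the same reading, quantitative mapping needs the extra scene information that cameras and LIDAR provide.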

One approach is to develop new algorithms that can incorporate even more of the information that sensors collect. For example, scientists have seen promising early results from new imaging algorithms that can generate maps using the full range of energies measured by gamma ray detectors. (Previous methods were tied to a smaller, more limited energy range.)

Radiation mapping systems may also find new applications in the future. Researchers could potentially use them for critical materials recovery: searching former mine sites for geologically interesting materials that were discarded because they weren’t deemed valuable at the time, such as lithium for electric cars.

“That’s a pretty large problem, and one that we hope to be able to help address,” Quiter said.

Radiation mapping could also potentially help teams find some of the millions of landmines buried around the world. While landmines are not radioactive, researchers are investigating whether a technique called “active probing,” which uses neutrons, might cause landmines to emit gamma rays that detectors could then pick up. Vetter can also imagine using the technology to monitor the health of spacecraft and astronauts on longer journeys where they are exposed to cosmic radiation.

“It’s exciting where we are today, with this enormous advance in technologies that allows us to map the world in real time,” Vetter said. “But it’s also exciting to see what is still out there to do, and what will come in the future.”

Scene data fusion was recently highlighted as an example of a nuclear science application in the 2023 Long-Range Plan for Nuclear Physics, released by the Department of Energy’s Nuclear Science Advisory Committee.

The work integrating LAMP and Spot is supported by DOE’s National Nuclear Security Administration (NNSA).

###

Founded in 1931 on the belief that the biggest scientific challenges are best addressed by teams, Lawrence Berkeley National Laboratory and its scientists have been recognized with 16 Nobel Prizes. Today, Berkeley Lab researchers develop sustainable energy and environmental solutions, create useful new materials, advance the frontiers of computing, and probe the mysteries of life, matter, and the universe. Scientists from around the world rely on the Lab’s facilities for their own discovery science. Berkeley Lab is a multiprogram national laboratory, managed by the University of California for the U.S. Department of Energy’s Office of Science.

DOE’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit energy.gov/science.