As Sarai Finks was sorting through datasets of bacterial genome sequences last year, she became frustrated by missing information. Finks studies how dietary changes affect the communities of bacteria-infecting viruses that live in our guts, and how these changes in turn affect our health. She needed to know more about the microbiome samples represented in the datasets – in particular, more details about the human environments they came from. Where did the person live? What kind of foods did they eat? What did they drink? Without those specifics, Finks found it difficult to connect the dots between the viruses, the microbes they interact with, and the gut conditions that shift with diet.

On one hand, the mere existence of a wealth of biological data that Finks did not have to generate herself was a boon. On the other hand, the data was a mess – inconsistent and incomplete.

Finks’ moment of frustration is familiar to any researcher who studies microbial communities, also known as microbiomes. Understanding microbiomes gives us insight into big topics like the origins of disease and carbon sequestration in soil, and helps answer intriguing questions, such as how life thrives in the dark depths of the ocean. To discover which organisms are in a given microbiome and what each of them is doing, scientists gather samples and analyze the DNA, RNA, and proteins within, sometimes going as far as trying to identify every organic compound present. These studies generate giant datasets of molecular information and genetic sequences that differ in organization, notation style and vocabulary, and underlying software, depending on the team that created them.

Other researchers may benefit greatly from this data, especially those without the time or resources to perform original sample analyses, but actually using it can feel like trying to read an encyclopedia written in a different language, with pages missing.

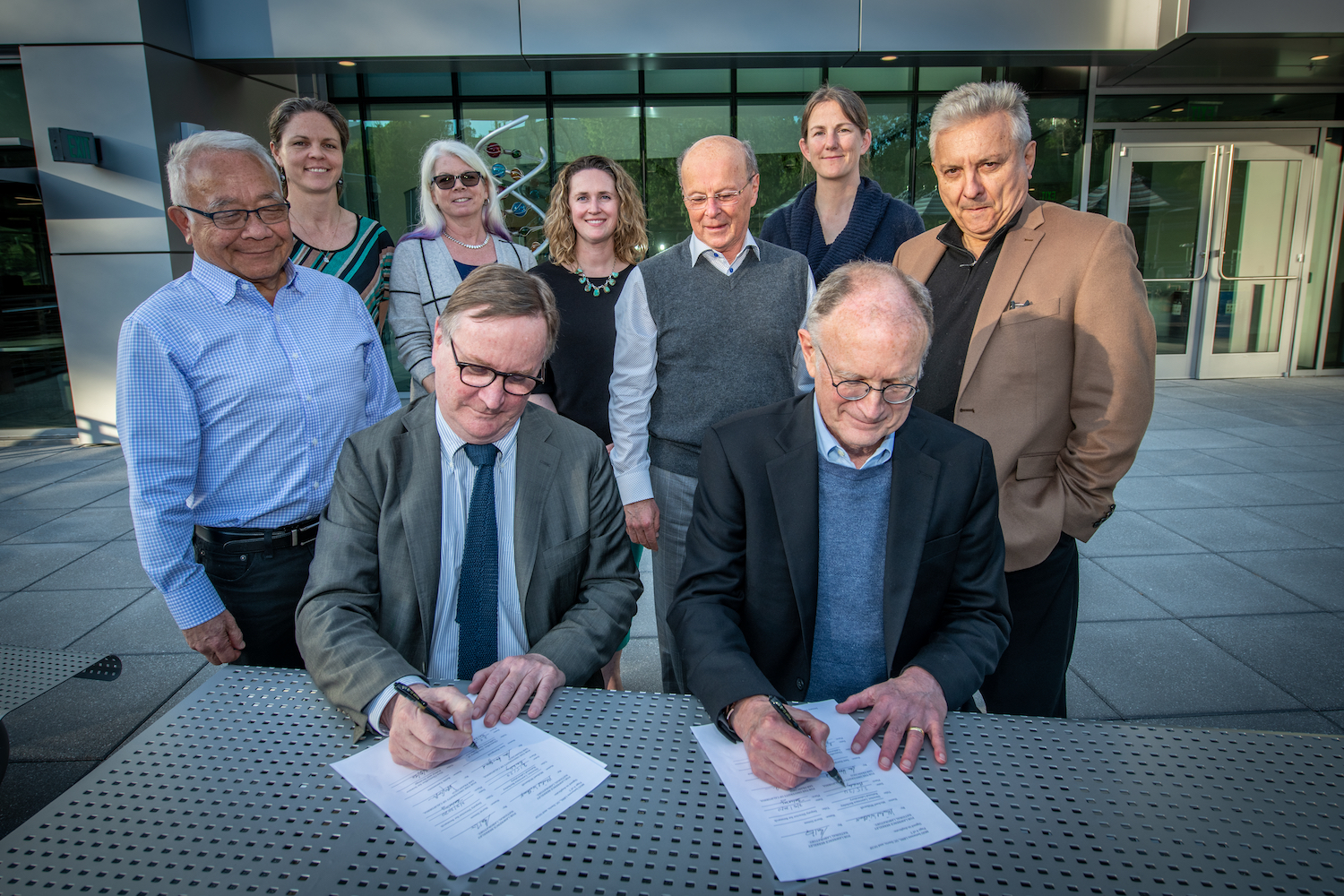

“Data standards in microbiome research are critical to enable cross-study comparison, share and reuse data, and to build upon existing knowledge of what microbes do in their environments and where they occur,” said Emiley Eloe-Fadrosh, Program Lead of the National Microbiome Data Collaborative (NMDC). The NMDC was founded in 2019 by a diverse group of experts – with funding from the Department of Energy – to help address the ongoing data challenges through community engagement and the creation of new tools and standardized practices. The NMDC is led by Eloe-Fadrosh and scientists from Lawrence Berkeley National Laboratory (Berkeley Lab), Los Alamos National Laboratory, and Pacific Northwest National Laboratory.

“Without standards for how samples are collected or processed, researchers are left with having to contact the primary research team to get more information, spend significant time curating and re-processing data to be consistent for downstream analyses, or simply not use that data,” said Eloe-Fadrosh.

Since its inception, the NMDC has launched two online platforms that enable data sharing and searching, and developed a bioinformatics software system for data processing called NMDC EDGE. However, as impactful as these tools might be, the NMDC leaders know that true change in the field will also require a shift in the scientific culture.

In 2021, the NMDC launched its Ambassador Program to train early career scientists from a range of research areas in best practices for biological data standardization, and to equip them with the resources and experience needed to share their new skills. The Ambassadors can then train peers at their home institutions and beyond by hosting their own events and workshops.

The team believes this community-driven learning model will spread the principles of Findable, Accessible, Interoperable, and Reusable (FAIR) data across the country. “We wanted to get away from the idea of ‘build it and they will come.’ We work closely with early career researchers who are the ones on the front-line doing research,” said Eloe-Fadrosh.

After navigating the logistical challenges of the early pandemic, the team recruited 12 Ambassadors from universities, national laboratories, and government agencies for the pilot program. Between 2021 and 2022, these Ambassadors gave presentations to more than 800 researchers.

The program is now in its second cohort cycle, with 13 Ambassadors. Sarai Finks is one of them. “My motivation for getting involved began with my own experiences dealing with the challenges of incomplete metadata from publicly available whole genome sequencing datasets and learning more about how I could better my own practices. I was also motivated to become involved through my awareness of barriers to accessing computational resources needed for working with multi-omics datasets,” said Finks, referring to the combination of DNA (genomic), RNA (transcriptomic), protein (proteomic), and metabolite (metabolomic) datasets that are analyzed to understand microbial activity. “Not every researcher has access to high performance computing facilities or the skillsets often required to implement programs needed for analyses of multi-omics data.”

Overwhelming omics

Ishi Keenum, an assistant professor at Michigan Technological University, is another 2023 Ambassador. She researches how genes that give microbes antibiotic resistance are shared in human-built systems like wastewater treatment streams. “I use bioinformatics to study how resistance genes are changing in the microbiomes in these environments, and then look at different ways that we can mitigate resistance genes spreading,” she said.

Keenum has had her share of challenging moments navigating non-standardized data, but the issue became more urgent in the early days of the pandemic, when she and her colleagues wanted to continue their work but couldn’t go into the field to collect new samples. Keenum turned to datasets available in the published literature, hoping to perform some meta-analyses, but was stymied by data scattered across many different repositories. And once she finally found omics data relevant to her research, the terminology used in the metadata was often so unclear that she couldn’t be confident where and how the samples had been collected.

“The words that we use can mean very different things, and there was just a lot of frustration to try to figure out what people had sequenced,” said Keenum. “I had to individually email a lot of people and many didn’t respond. So, a lot of data had to get eliminated from our study.”

Now, as an NMDC Ambassador, she is excited to help build the scientific culture of the future, where FAIR data is the norm. She reports seeing an appetite for change among her fellow early career colleagues when she presents on the topic.

Modern sequencing technologies and bioinformatics tools have made it possible to quickly generate huge omics datasets that would have taken months or years to produce in the past. As a result, Ph.D. students and postdoctoral researchers now commonly spend much of their time working with big data. “I think a lot of us got to the point where we’re pretty good at bioinformatics, and we want to see it unleashed in the world. We can say, okay, I did this in my study, what does everyone else see? Can we like look at a million studies all at once?” said Keenum. “And we’re now at the point where, technologically, you really can do that, if the data is set up for it. So, we all see what happens when you don’t have the information captured well enough to do that, and how painful that can be.”

Training the next generation of trainers

The NMDC team published an article in Nature Microbiology detailing the program’s achievements thus far, and has begun planning for a 2024 cohort. The team plans to offer a similar curriculum while continuing to adapt the program based on insights from this year. After the pilot, the leaders realized that researchers would also benefit from best practices for data collection, not just downstream data management, so the 2023 cohort received comprehensive training and toolkits that apply to the entire research workflow.

Feedback from the cohorts is also helping the NMDC team to improve the resources they offer to the entire research community: the Submission Portal, where scientists can submit sample data; the Data Portal, which provides powerful search capabilities for cross-study comparisons of multi-omics data; and NMDC EDGE, a user-friendly bioinformatics platform that supports data processing and also allows non-bioinformaticians to access high-performance computing systems for their own omics analyses.

“The most gratifying has been to work closely with the Ambassadors to learn directly from them about how we can improve our tools. I also love to showcase the diverse research they are doing to a broad audience,” said Eloe-Fadrosh.

A FAIR Future

There are already clear examples of the major scientific advances that data standardization can enable. Perhaps the best known is the Human Genome Project, in which 20 institutions worked together for 13 years to sequence the human genome, using tools considerably slower than those available today. This massive undertaking has led to a better understanding of nearly all aspects of human health and aided the development of new medicines – and it was made possible by a shared commitment to developing and using open-access tools and open data-sharing practices that anticipated today’s FAIR principles.

Similarly, recent comparisons of environmental microbiomes across ecosystems, made possible by data standardization, have provided critical information for climate modeling, water quality management, food production, and biotechnological innovation.

The benefits of standardization are already apparent, and they are likely to grow substantially in the coming years as new artificial intelligence (AI) tools become increasingly capable of synthesizing hypotheses and discoveries from large datasets. By pairing these emerging tools with FAIR datasets created and shared by teams across the world, researchers like Finks and Keenum will be able to generate biological breakthroughs we can’t even imagine today.

###

Lawrence Berkeley National Laboratory (Berkeley Lab) is committed to delivering solutions for humankind through research in clean energy, a healthy planet, and discovery science. Founded in 1931 on the belief that the biggest problems are best addressed by teams, Berkeley Lab and its scientists have been recognized with 16 Nobel Prizes. Researchers from around the world rely on the Lab’s world-class scientific facilities for their own pioneering research. Berkeley Lab is a multiprogram national laboratory managed by the University of California for the U.S. Department of Energy’s Office of Science.

DOE’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States, and is working to address some of the most pressing challenges of our time. For more information, please visit energy.gov/science.
